Design a url shortener

Question

Step 1 - Understand the problem and establish design scope

Questions to ask

- Can you give an example of how a URL shortener work

- What is the traffic volume

- How long is the shortened URL

- What characters are allowed in the shortened URL?

- Can shortened URLs be deleted or updated?

Back of the envelope estimation

- Write operation: 100 million URL generated per day

- That is 1160 per second

- Read operation: 10:1

- Hence 11600 read per second

- Assume the service will run for 10 years

- 365 billion records

- Average URL length is 100

- 365 billion * 100 bytes

- 365 TB

TO CLARIFY

KB -> 1,000

MB -> 1,000,000

GB -> 1,000,000,000

TB -> 1,000,000,000,000

Step 2 - Propose high-level design and get buy-in

API Endpoints

POST api/v1/data/shorten

- request parameter: {longURL: longURLString}

- return shortURL

GET api/v1/shortUrl

- Return longURL for HTTP redirection

URL redirecting

- once the short URL inputted and received by the server, it changes to the long URL with 301 HTTP redirect

HTTP redirect

Hash function of 1 to 1 mapping

Step 3 - Design deep dive

Data model

- Since using in memory hash table is not feasible for real world system

- We can use relational database for such one to one mapping

Hash function

- 10 + 26 + 26 possible character values for all letter and numbers

- Hence need 7 digits (3.5 trillion) to hold all URLs

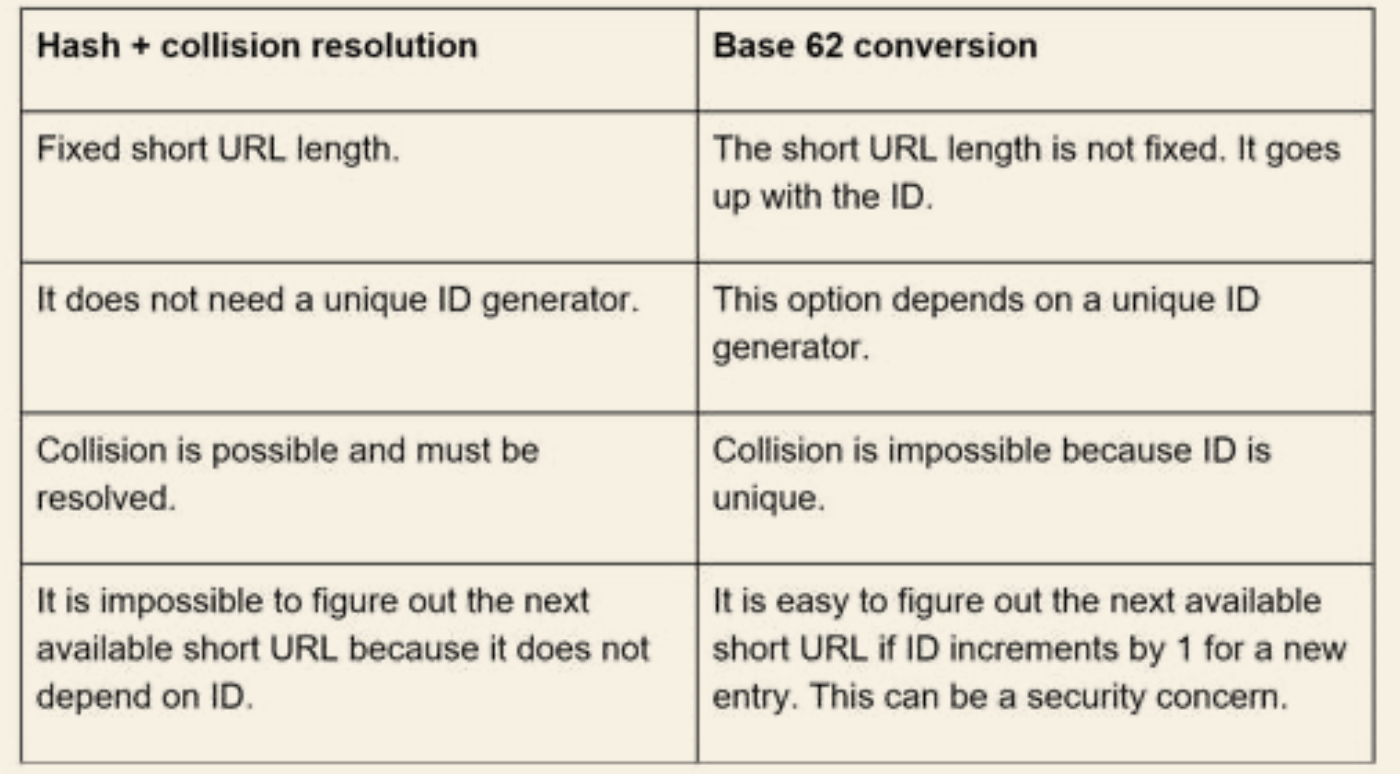

Collision

- Most common functions such as CRC32, MD5 or SHA-1 have more than 7 characters, hence we are only using the first seven

- However, this may introduce hash collision

- We can add predefined string to the long URL and re-hash if the URL already exist in the database

- However expensive to make multiple request to DB

- use bloom filter to improve

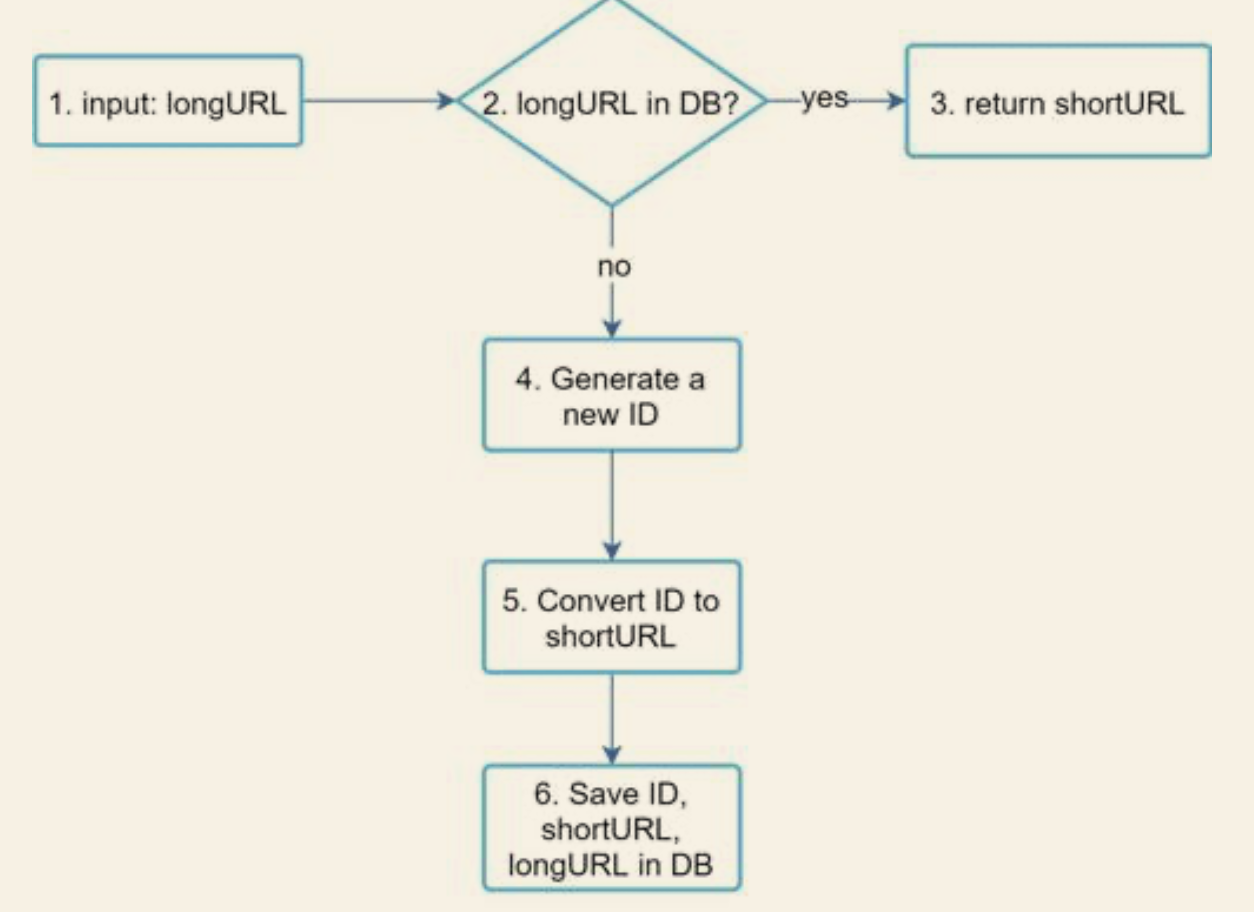

Base 62 conversion

- Or simply turn the ID of the url into another digit base to reduce the length

URL shortening deep dive

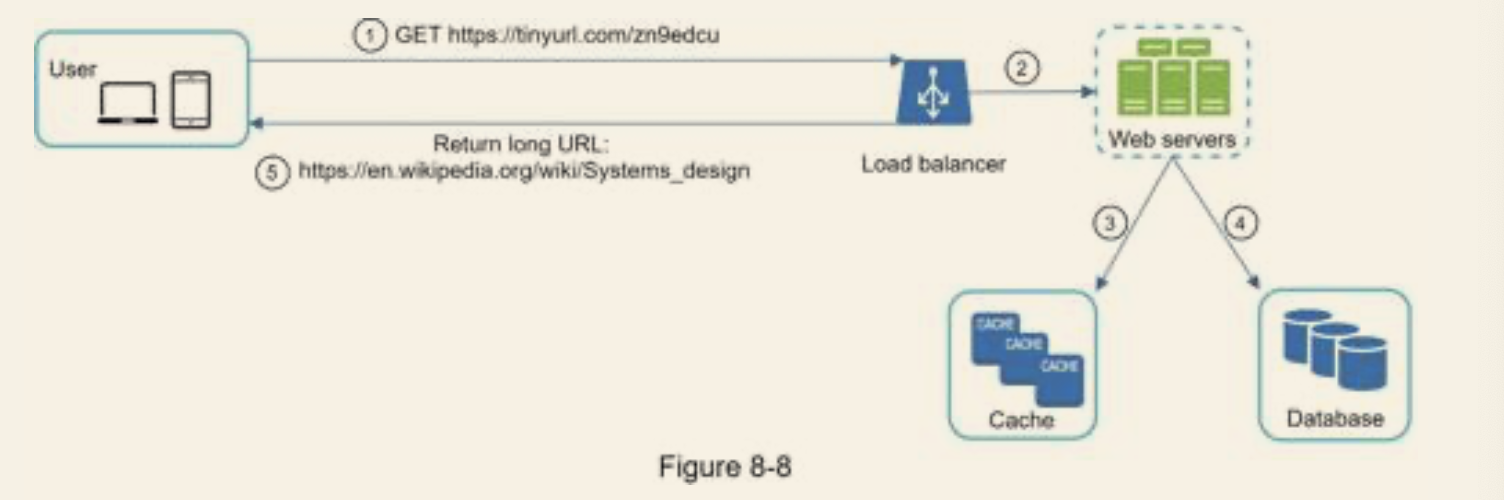

URL redirecting infrastructure

- Load balancer manages the incoming request

- If in cache, return immediately

- Else search the database

Step 4 - Wrap up

Topics to discuss

- Rate limiter to avoid malicious requests

- Web-server scaling - since stateless, hence easy to scale up

- Database scaling - replication and sharding

- Analytics - like the volume of clicks, when do they click...

- Availability, consistency and reliability