Design a rate limiter

Rate Limiter

- Benefits

- Prevent resource starvation caused by Denial of Service (DoS) attack.

- Reduced cost.

- Especially for companies that relies on third party APIs

- Prevent server from being overloaded

- Filter out bots or user's misbehaviour

Step 1 - Understand the problem and establish design scope

Questions to ask

- What kind of rate limiter?

- Server or client side

- Does rate limiter throttle based on IP, user ID or other properties

- What is the scale

- Startup or large companies

- Will the system wok in a distributed environment?

- Is the rate limiter a seperate service or should it be implemented in application code?

- Do we need to inform user who are throttled?

Example requirements

-

Accurately limit excessive requests

-

Low latency (not slow down HTTP response time)

-

Distributed rate limiting (across multiple services and processes)

-

High fault tolerance

-

Limited memory as possible

-

Exception handling (Show clear exception message to throttled users)

Step 2 - Propose high-level design and get buy-in

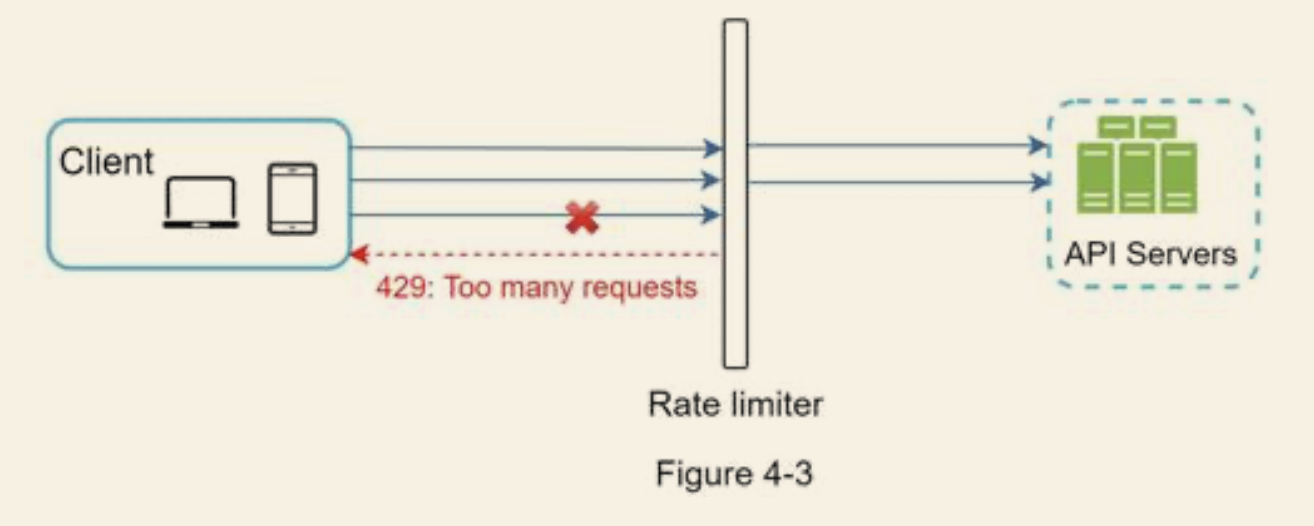

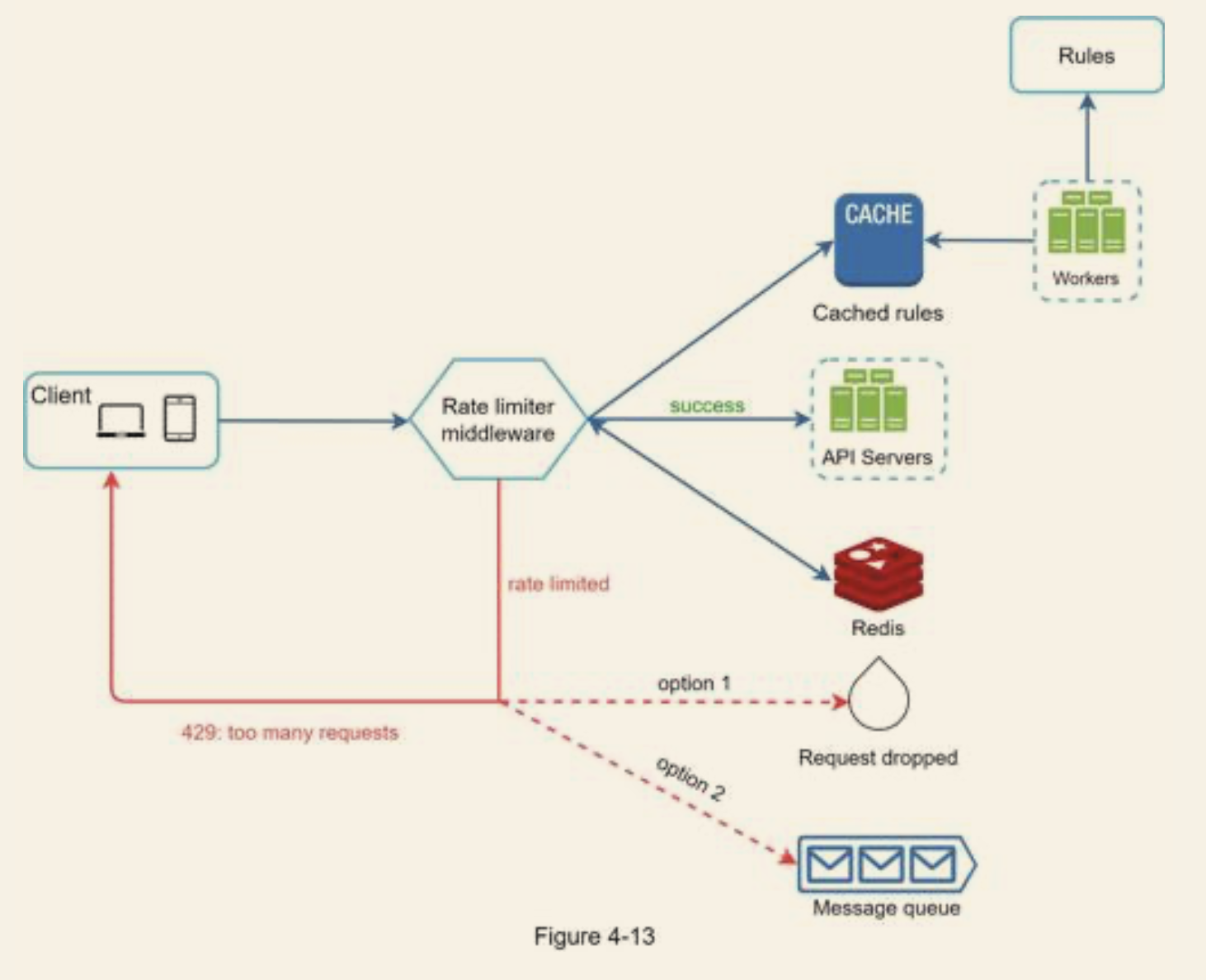

- Can be implemented on client-side, server-side, or a middleware between client and server

- Usually not in client side, since the requests can be easily forged into malicious ones.

- Return HTTP 429 if exceeds, which indicates too many request from the client side

- Since cloud micro-services have become widely used, rate limiting is usually implemented within a component called API gateway.

How to implement the rate limiter

- Evaluate the technology stack, make sure current programming language is sufficient to implement rate limiter on the server-side.

- Whether you are using a third-party gateway or not, determines whether you have the full control over the implementation and algorithms

- Whether you are using micro-services or not

- Building own rate limiter takes time and effort, evaluate based on engineering resources

Algorithms

Token bucket

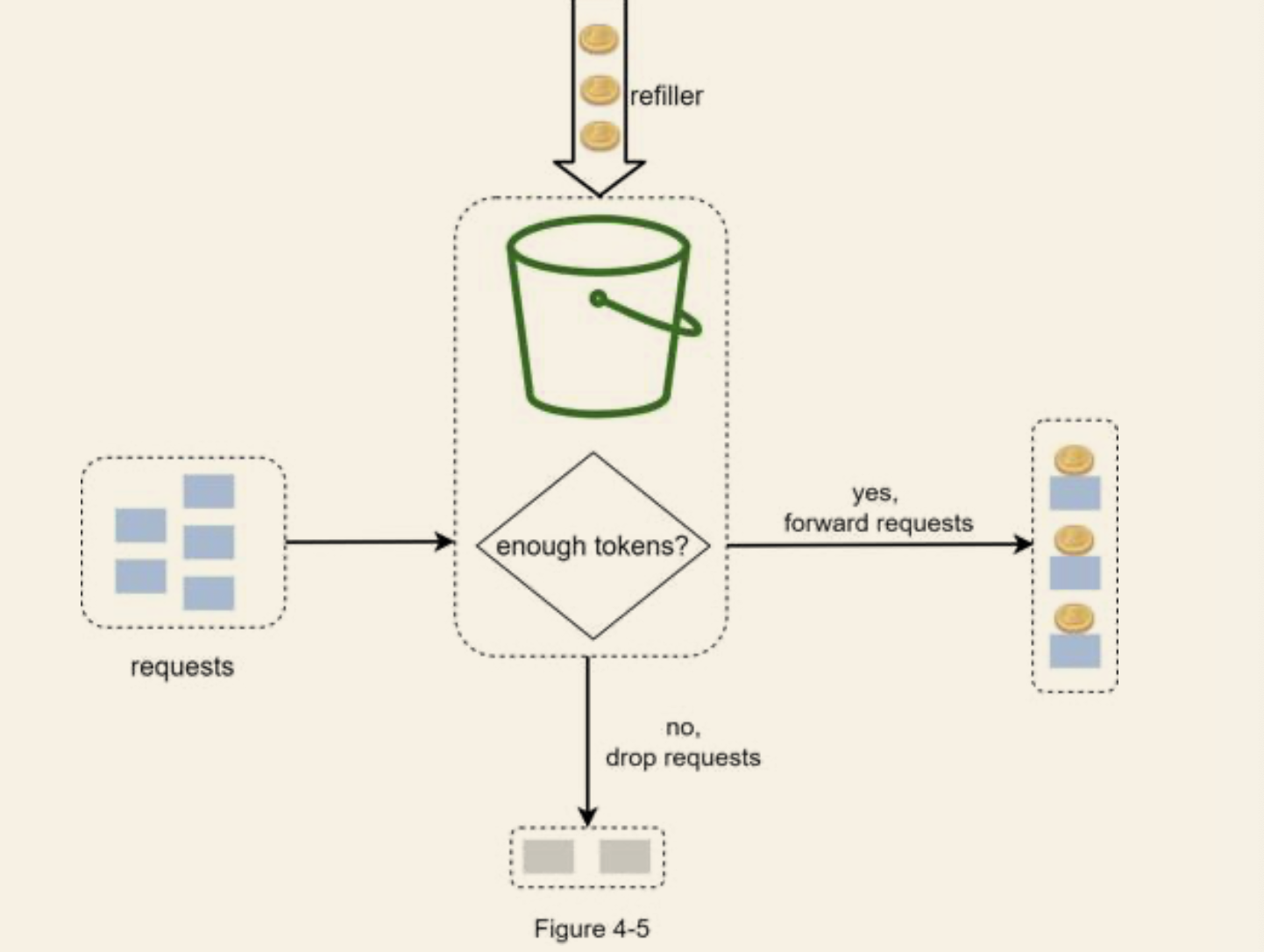

- Details

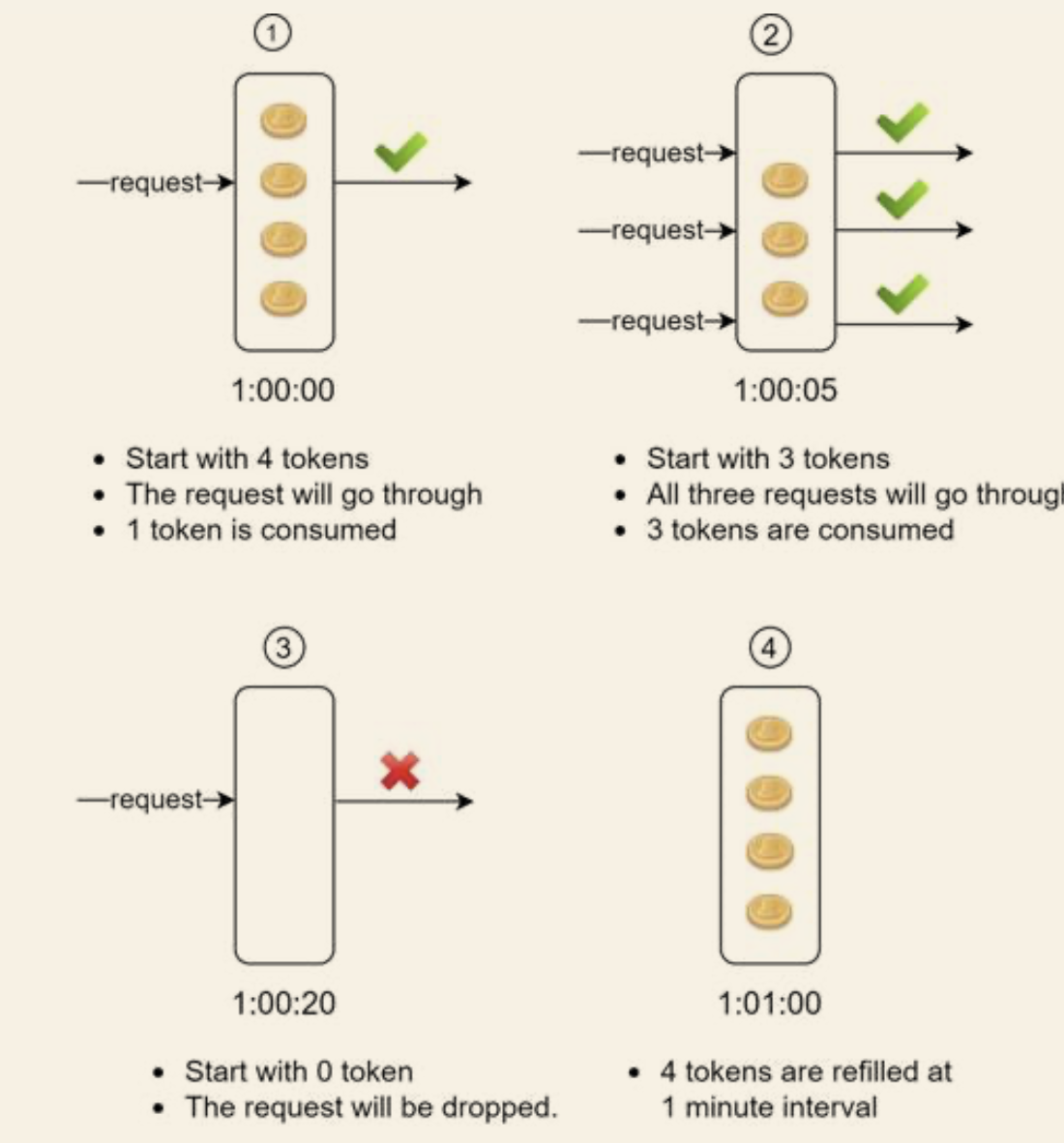

- We have a bucket with predefined "tokens" capacity

- There is a "re-filler", which insert tokens of certain quantity to the bucket per time unit

- When there are not enough tokens, the request is dropped, else, the request is forwarded along the token

- Algorithm

- Bucket size

- Re-fill rate - Number of token put into bucket every second

- How many bucket do we need? (Clarify this requirement)

- Create new bucket for each API endpoints - such as friend request, post...

- Based on IP address

- If have a overall request limit, just have a global bucket shared by all requests

- Pro:

- Easy to implement

- Memory efficient

- Allows burst of request as long as there are tokens left

- Cons:

- Limited parameter to fine tune algorithm to more specific needs

Leaking bucket algorithm

- Detail

- Similar to token bucket, but requests are processed at a fixed rate

- FIFO queue

- When request arrives, check whether the queue is full, drop the request if full

- Request are pulled from the queue and processed at regular intervals.

- Algorithm

- Bucket size

- Outflow rate

- Pros:

- Memory efficient (limited queue size)

- User cases where stable outflow is required

- Cons:

- Burst of request fill up the queue with old requests first, if they are not processed in time, it will block the new requests

Fixed window counter

- Details

- Divide timelines into fixed size time window, and assign counter to each window

- Each request increment the counter by one

- Once the counter reaches the predefined threshold, requests are dropped until a new time window starts

- Pros:

- Memory efficient

- Easy to understand

- Setting quota per time interval fits certain use cases

- Cons:

- Spike in request volume at the edges of time interval

- The last few request from the previous time interval plus the first few request in the next interval, may exceed the quota of this period of interval (the interval starts from the middle of the previous interval to the middle of current interval)

- Spike in request volume at the edges of time interval

Sliding window log

- Details

- Keep tracks of request timestamps

- Kept in cache

- When new request comes in, remove all outdated ones

- Add the timestamp for the new request to the log

- Keep tracks of request timestamps

- Pros:

- Accurate

- Cons:

- Memory heavy, even rejected timestamp might still be stored

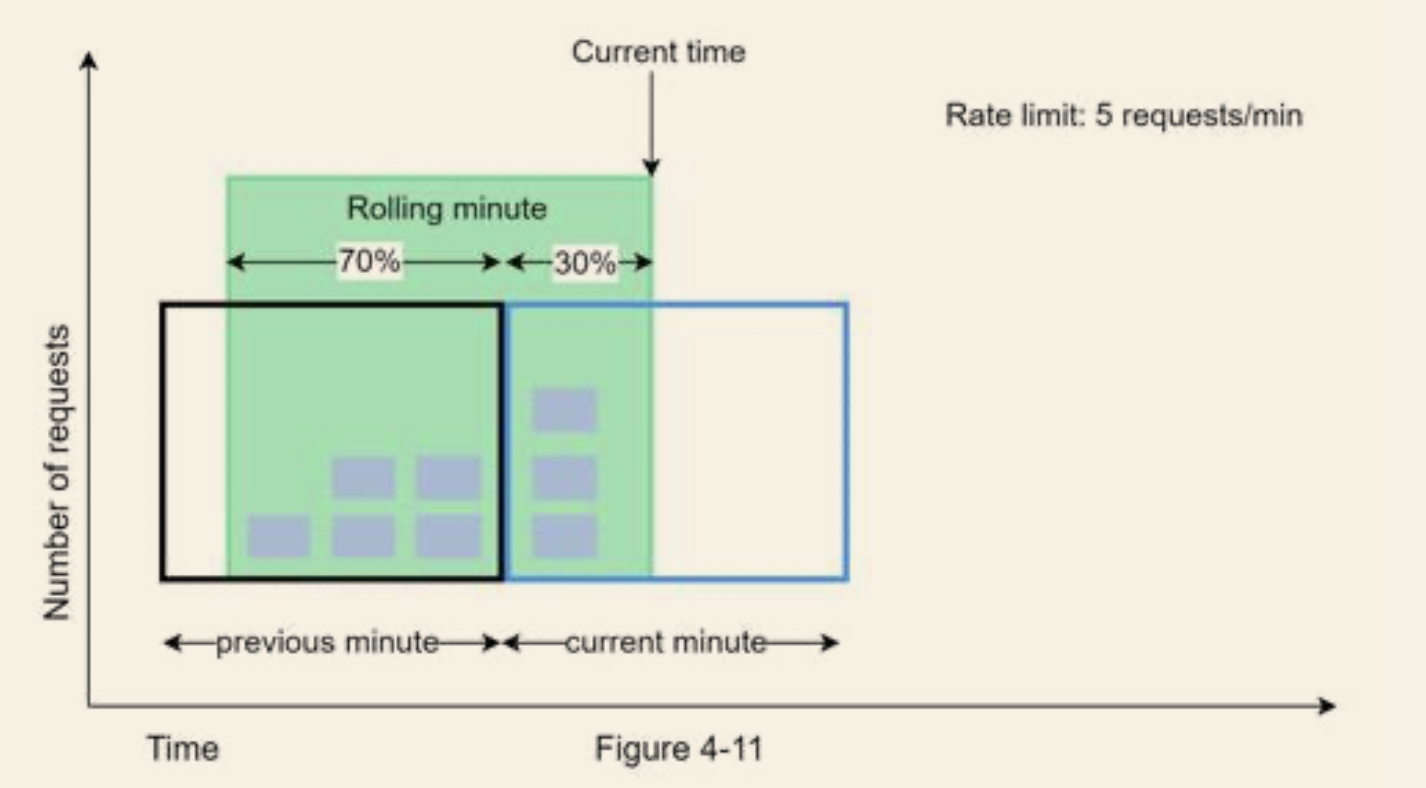

Sliding window counter

- Details

- Combines the previous two

Requests in current window + requests in the previous window * overlap percentage of the rolling window and previous window

- Pros:

- Smooth out spike of traffic, since the rate is based on the average rate of the previous window

- Memory efficient

- Cons:

- Assumes that the request in previous window is evenly distributed

High level architecture

- Keep a tracker on how many request from same user, IP address, etc.

- Keep it in an In-memory cache to avoid slowness of disk access

- Redis, which offer two commands

- INCR: Increment the counter by 1

- EXPIRE: Sets a timeout for the counter, delete if exceeds the time

Step 3 - Design deep dive

Rate limiting rules

- Lyft open-sourced their rate-limiting components:

domain: auth

descriptors:

- key: auth_type

Value: login

rate_limit:

unit: minute

requests_per_unit: 5

- Clients are not allowed to login more than 5 times in 1 minute

Exceeding the rate limit

- API returns a HTTP response code 429.

- In some cases you can keep the requests and process them later

- Information such as remaining number of allowed request within the window, number of second to wait make request are with the returned HTTP headers

Distributed environment

Race condition

- Locks are the most obvious solution

- However significantly slow down the system

- Work arounds:

- Lua script

- Sorted sets data structure in Redis

Synchronisation issue

- With multiple rate limiter, since the web tier is stateless, therefor the all rate limiter should have these information from Redis

- Use centralised data store like Redis

Performance optimisation

- Multi-data center setup is crucial to server across different geo-locations

- Use eventual consistency model

Monitory

- Monitor the rate limiting algorithm and rules (to ensure they are effective)

- When too many valid request are dropped

- Or to accommodate spike in traffic

Step 4 - Wrap up

Additional discussions

Hard vs soft rate limiting

- Hard: Cannot exceed the threshold

- Soft: Can exceed the threshold for a short period

Rate limiting on different HTTP layer

Design client to avoid being rate limited

- Avoid making frequent API calls

- Catch and inform exceptions

- Add sufficient back off time to retry logic