Design a key-value store

Question

- The size of a key-value pair is small: less than 10 KB.

- Ability to store big data.

- High availability: The system responds quickly, even during failures.

- High scalability: The system can be scaled to support large data set.

- Automatic scaling: The addition/deletion of servers should be automatic based on traffic.

- Tunable consistency.

- Low latency.

Single server key-value store

- Store all key-value pairs in a hash table

- Can be optimised using:

- Data compression

- Store frequent data in memory and the rest on disk

Distributed key-value store

Data partition

- Use Consistent Hashing

- Minimise data movement in case of node are added or removed

- Distribute data across servers evenly

Automatic scaling

- Servers should be added and removed automatically depending on the load

Heterogeneity

- Number of virtue node is proportional to the server capacity

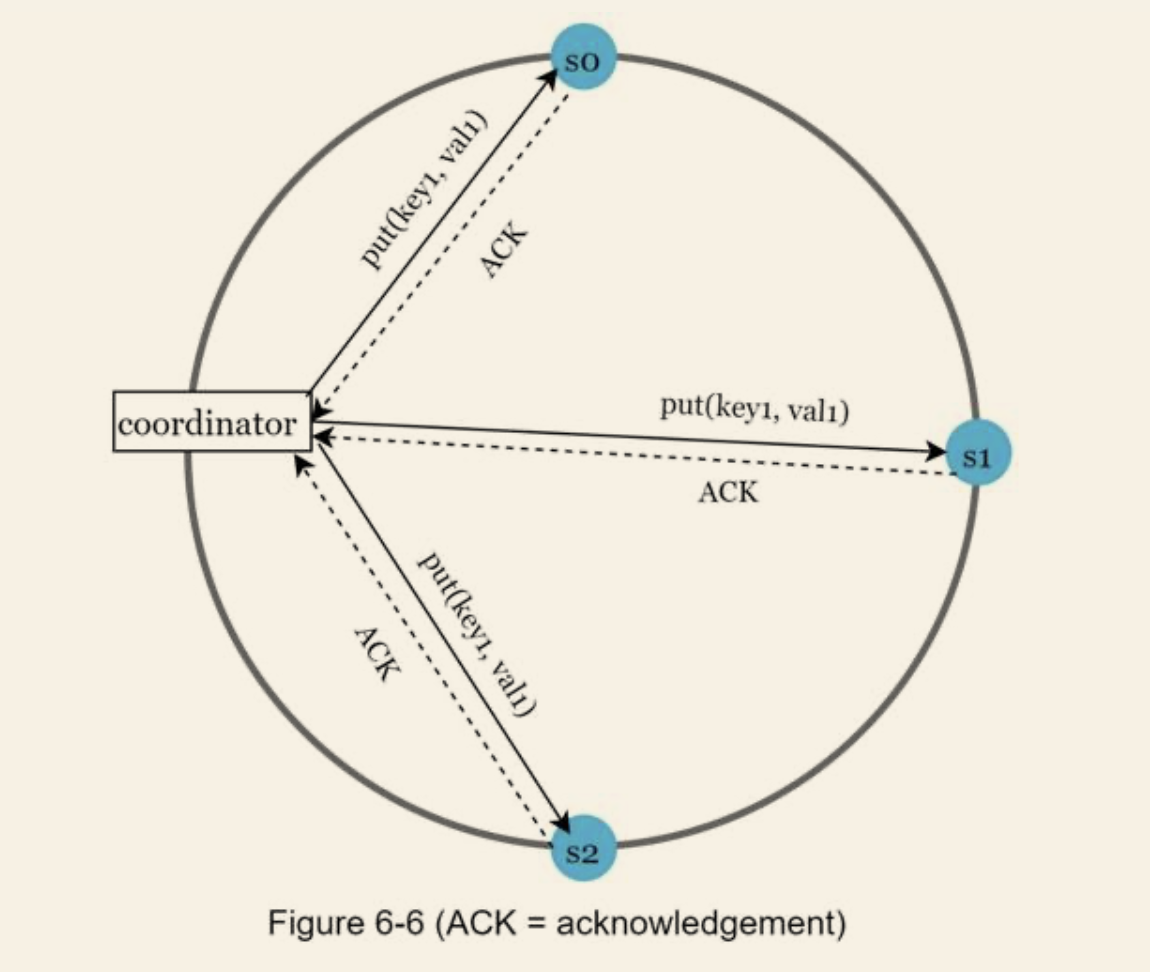

Data replication

- To achieve high availability, data must be replicated asynchronously

- Usually over the next N server clockwise from the consistent hashing of the servers

Consistency (Quorum consensus)

-

Definitions

- N = The number of replica

- W = For a write operation to be considered as successful, write operation much be acknowledged from W copies

- R = As above, but for reads

-

Possible setups:

- If R = 1 and W = N, the system is optimised for a fast read.

- If W = 1 and R = N, the system is optimised for fast write.

- If W + R > N, strong consistency is guaranteed (Usually N = 3, W = R = 2).

- If W + R <= N, strong consistency is not guaranteed.

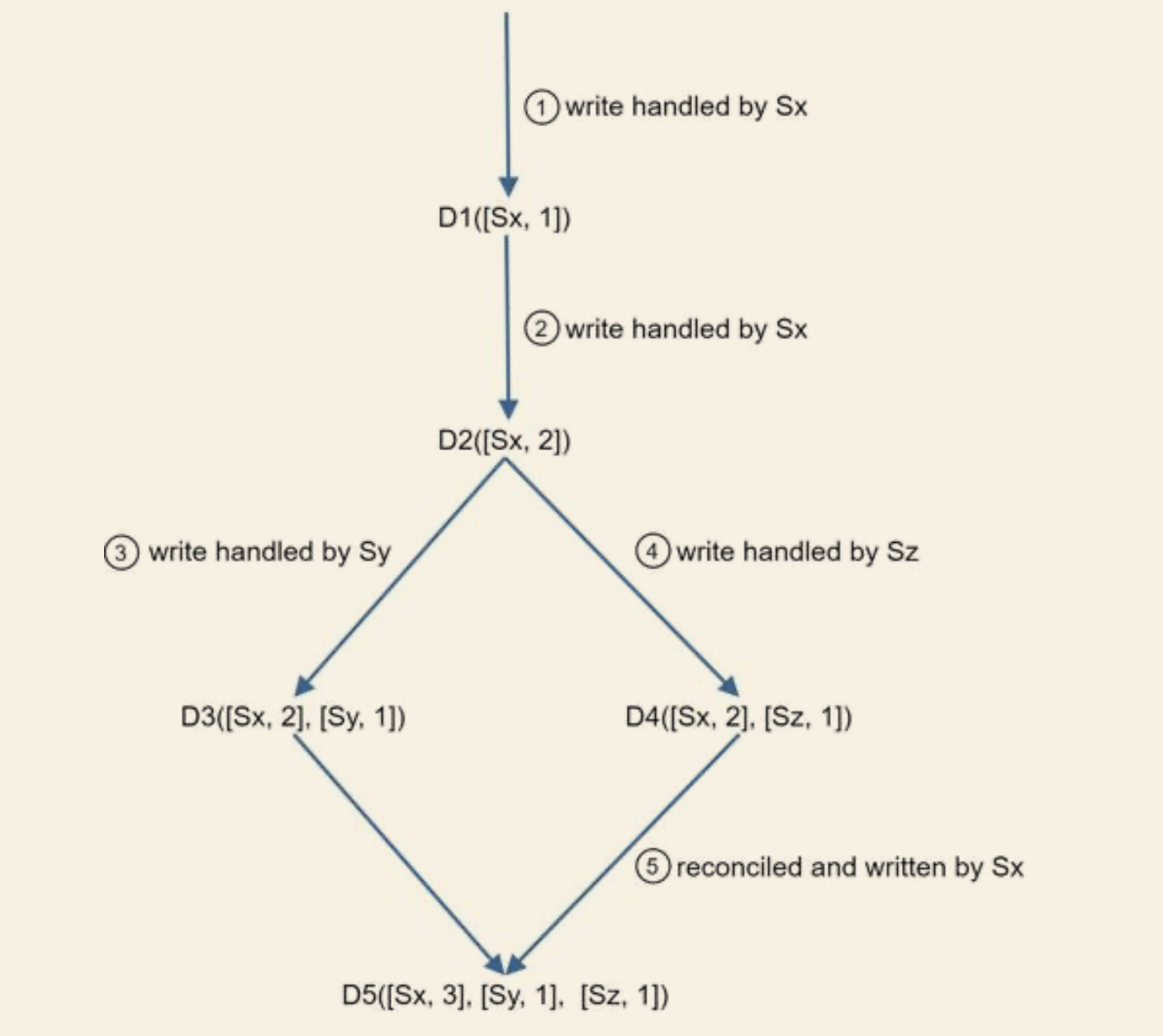

Inconsistency resolution: versioning

- Assume a vector clock is represented by D([S1, v1], [S2, v2], …, [Sn, vn]), where D is a data item, v1 is a version counter, and s1 is a server number, etc. If data item D is written to server Si, the system must perform one of the following tasks.

- Increment vi if [Si, vi] exists.

- Otherwise, create a new entry [Si, 1].

- If either the versioning or the servicer does not match, conflicts are identified.

- Downsides

- Vector clocks add complexity to the client, which requires the implementation of conflict resolution logic

- The server and version pair may grow rapidly.

- We can set a threshold length, if exceeds the limit, the oldest pair are removed

- May result inaccuracy in identifying conflicts, by based on a Dynamo paper, Amazon has not yet encountered this problem in production

Handling failures

Failure detection

Handling temporary failures

- "Sloppy Quorum"

- Instead of enforcing the quorum requirement, the system chooses the first W healthy servers for writes and first R healthy servers to read on the hash ring.

- Offline servers are ignored

- Hinted handoff

- For offline servers, all tasks are dealt according to consistent hashing cycle

- Once server back online, all changes will be pushed back to achieve data consistency

Handling permanent failure

Use Merkel Tree to identify which bucket (set of key-value pair) are not synchronised and synchronise those buckets only.

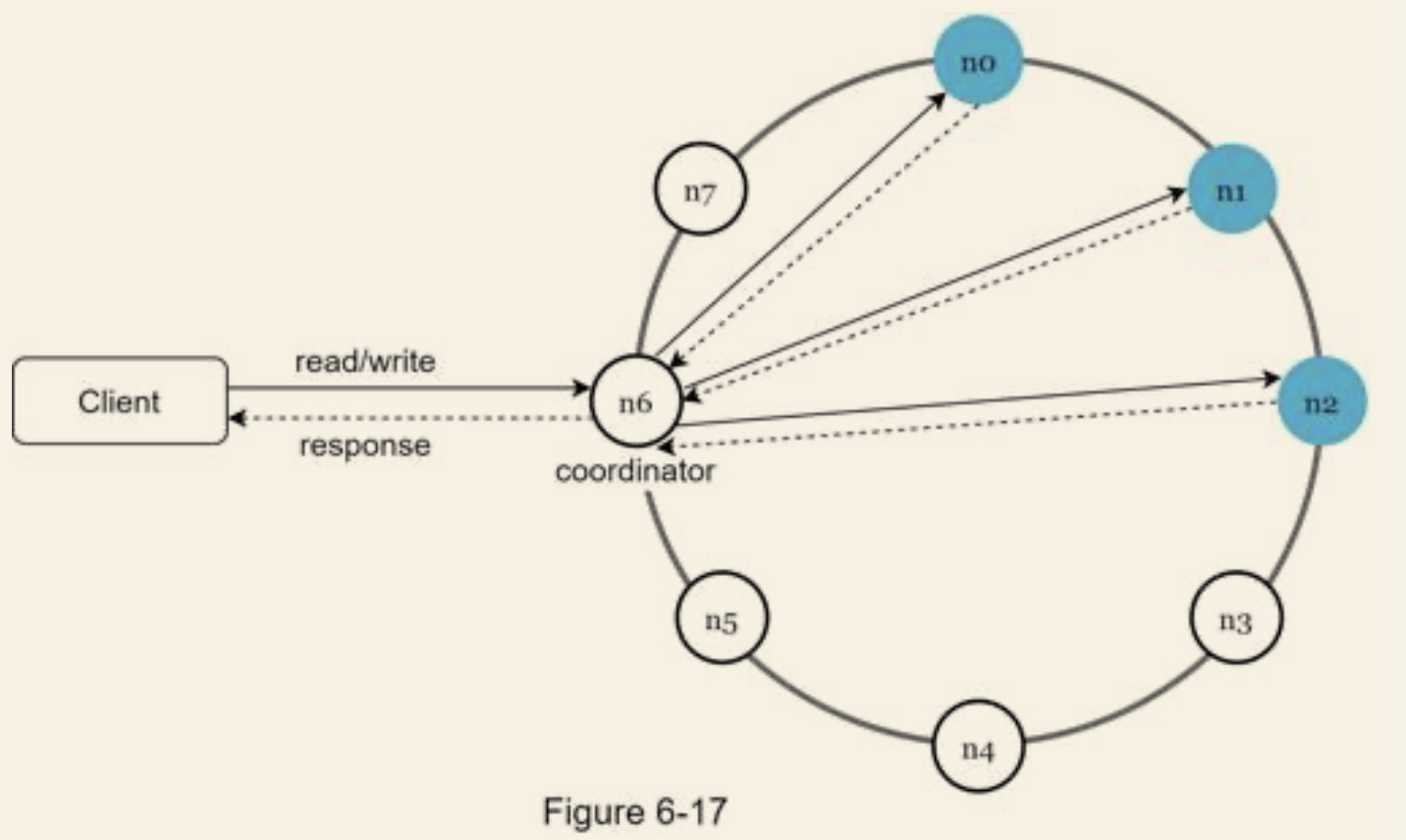

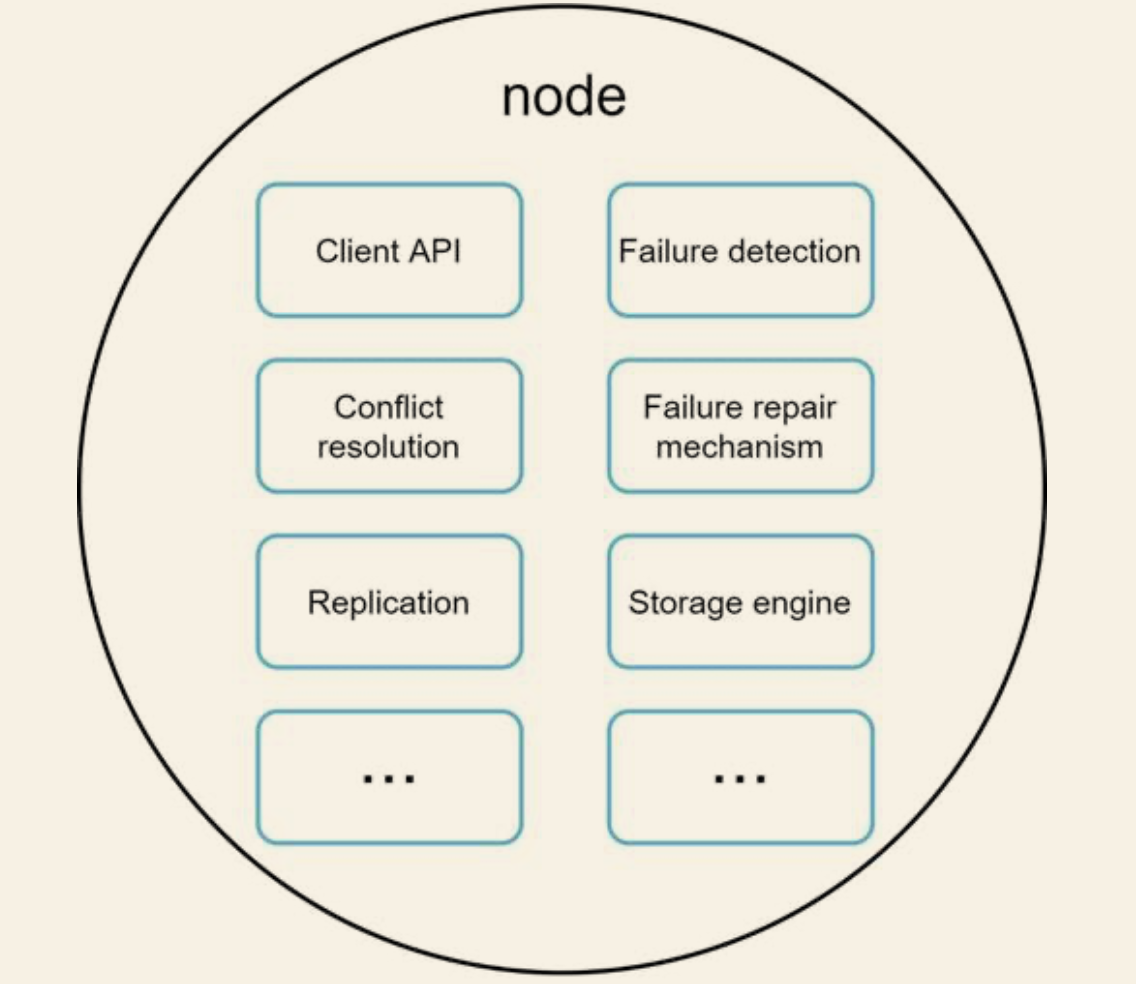

System architecture diagram

- Clients communicate with the key-value store through simple APIs: get(key) and put(key, value).

- A coordinator is a node that acts as a proxy between the client and the key-value store.

- Nodes are distributed on a ring using consistent hashing.

- The system is completely decentralised so adding and moving nodes can be automatic.

- Data is replicated at multiple nodes.

- There is no single point of failure as every node has the same set of responsibilities.

Write and read path

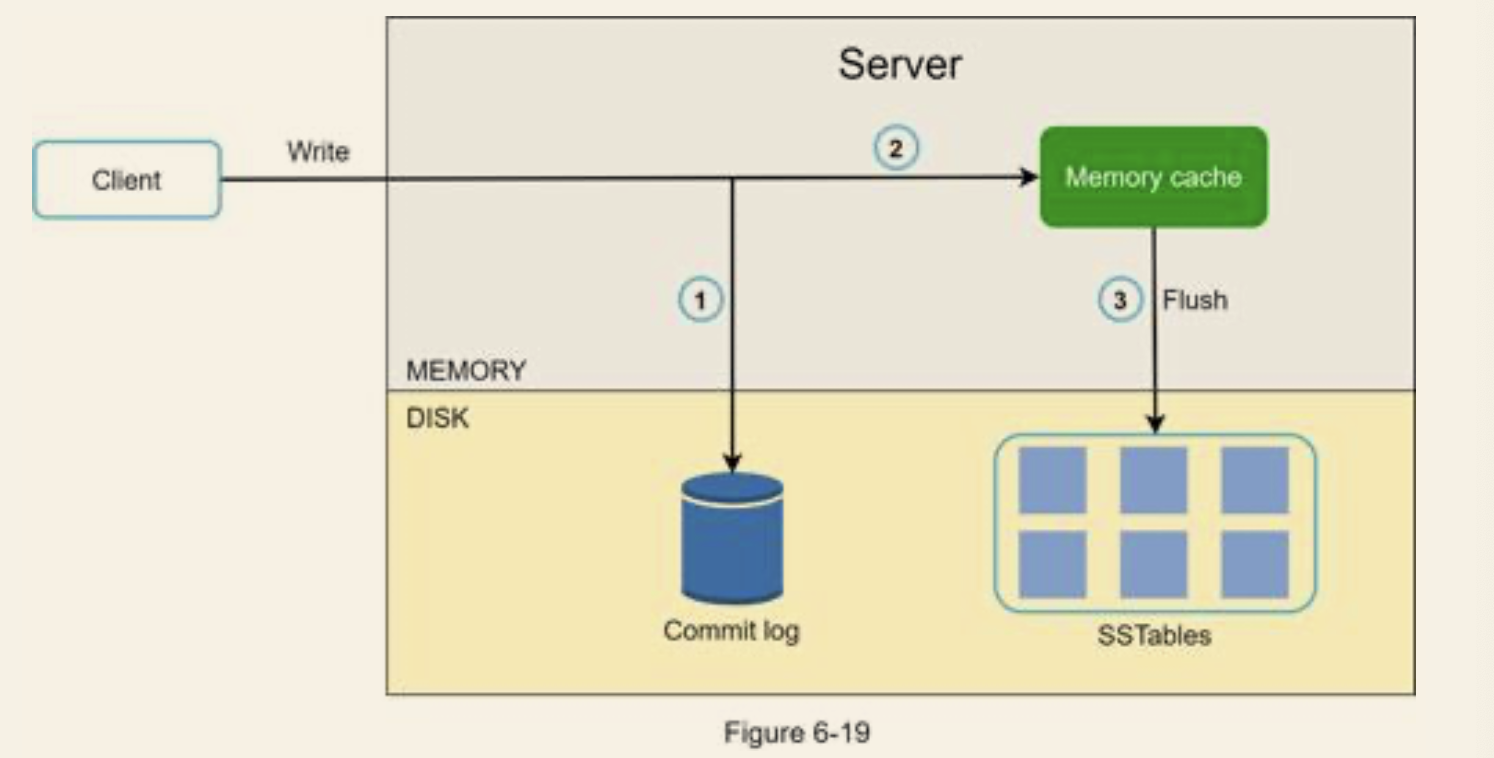

- The write request is persisted on a commit log file.

- Data is saved in the memory cache.

- When the memory cache is full or reaches a predefined threshold, data is flushed to SSTable [9] on disk. Note: A sorted-string table (SSTable) is a sorted list of <key, value> pairs. ”

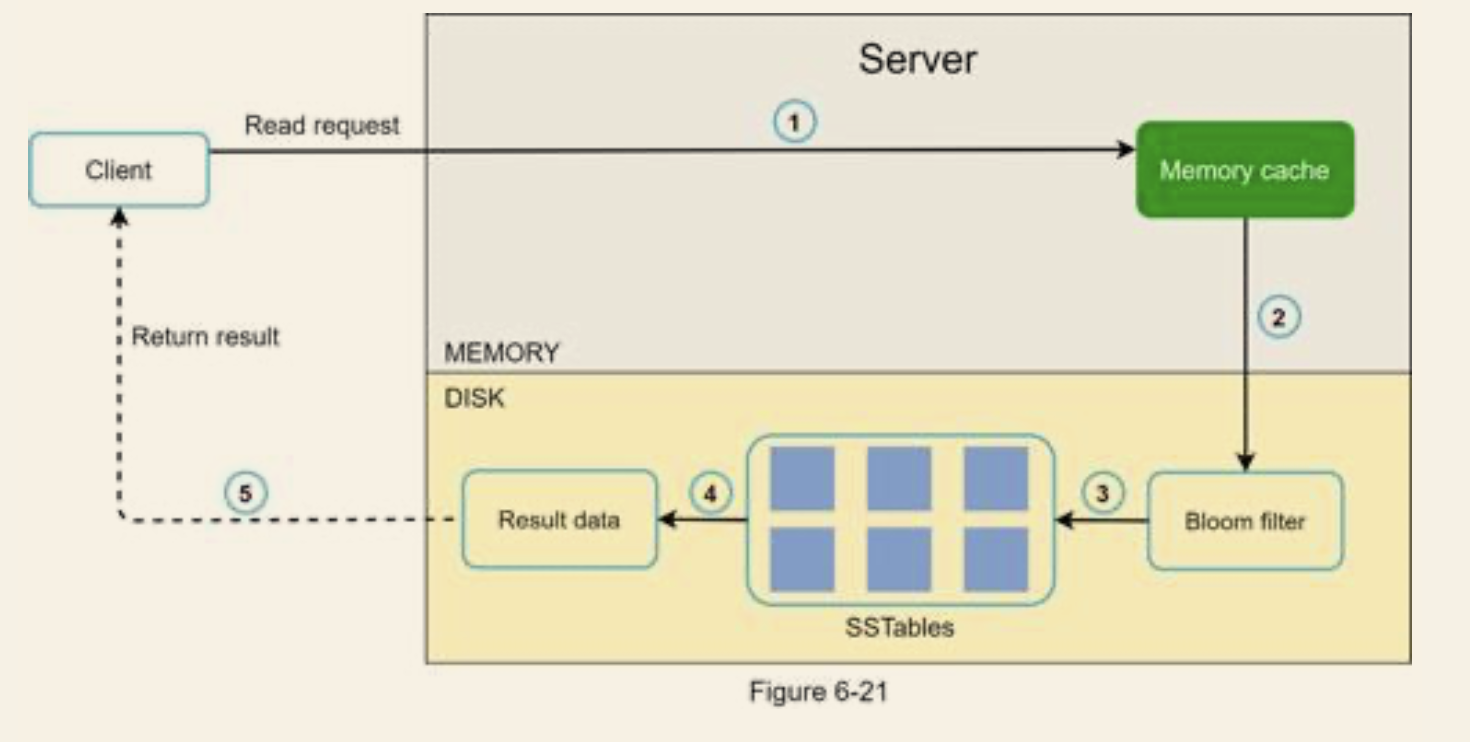

- The system first checks if data is in memory. If not, go to step2

- If data is not in memory, the system checks the bloom filter.

- The bloom filter is used to figure out which SSTables might contain the key.

- SSTables return the result of the data set.

- The result of the data set is returned to the client.