Application Layer

Principles of Network Applications

Client-server paradigm

- Server

- Permanent IP

- always-on host, often in data center

- Clients

- contact and communicate with server

- can be intermittently connected

- IP address can be dynamic

- Don't communicate directly with each other

- HTTP, FTP

Peer to peer architecture

- no always-on server

- arbitrary end systems directly communicate

- request and provide services mutually

- self scalability - new peers bring new service capacity and service demands

- peers are intermittently connected and change IP address

- e.g. P2P file sharing, blockchain

process communication

- within the same host, processes rely on inter-process communication (defined by the OS)

- on different hosts, processes communicate by exchanging messages

socket

- endpoint of a communication channel; a process sends messages into it and receives messages from it

- can be TCP or UDP

- identifiers

- IP address (32 bits in IPv4, identifies the host) and port number (identifies the process on that host)

application protocol defines:

- Message type exchanged

- message syntax

- message semantics (meaning of information in fields)

- rules for process to send and receive messages

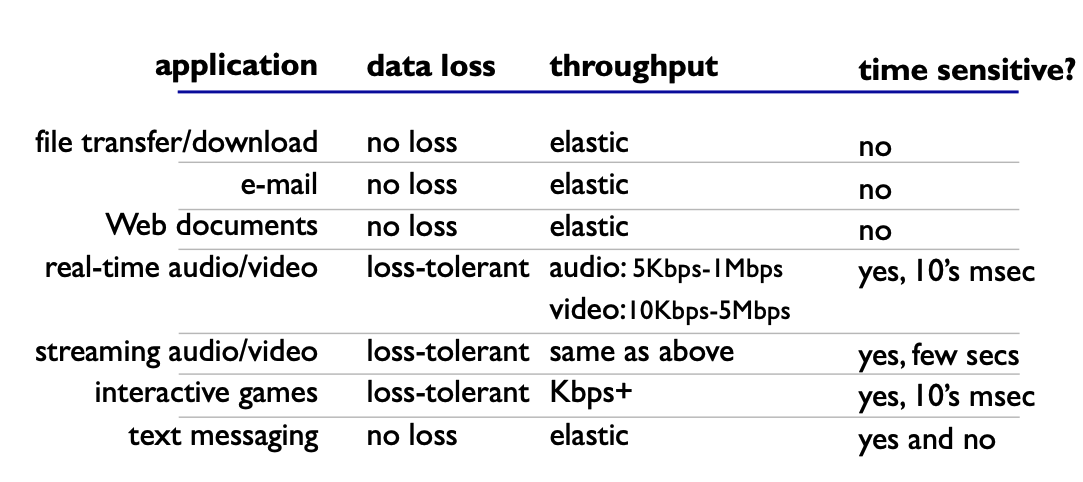

considerations when choosing a transport service for an app

- Data integrity

- tolerance to loss

- Timing

- delay

- Throughput

- Streaming needs a minimum throughput to be effective

- Security

TLS - transport layer security

- provides encrypted TCP connections

Web and HTTP

- A web page consists of linked objects, including HTML files, images, and JavaScript

- Each object is addressable by a Uniform Resource Locator (URL)

HTTP

- Hypertext Transfer Protocol

- Web's application layer protocol

- Stateless - the server maintains no information about past client requests

- HTTP request messages

HTTP/1.x headers are all text

- Not the most efficient encoding
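
Because HTTP/1.x messages are plain text, a request can be built and parsed with ordinary string operations. The following is a minimal sketch; `build_request` and `parse_request` are illustrative helpers (not from any library), and the host `www.example.com` and path are placeholders.

```python
CRLF = "\r\n"

def build_request(method, path, headers):
    """Build an HTTP/1.1 request: request line, header lines, then a blank line."""
    lines = [f"{method} {path} HTTP/1.1"]
    lines += [f"{name}: {value}" for name, value in headers.items()]
    return CRLF.join(lines) + CRLF + CRLF

def parse_request(text):
    """Split a request back into (method, path, version, headers)."""
    head = text.split(CRLF + CRLF, 1)[0]
    request_line, *header_lines = head.split(CRLF)
    method, path, version = request_line.split(" ")
    headers = dict(line.split(": ", 1) for line in header_lines)
    return method, path, version, headers

# Placeholder host and path, chosen only for illustration.
request = build_request(
    "GET", "/index.html",
    {"Host": "www.example.com", "Connection": "close"},
)
```

Every field name and value travels as readable characters, which is easy to debug but larger on the wire than a binary encoding.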

HTTP/2

- Transmission order of the requested objects is based on client-specified priority (not necessarily FCFS)

- Divides objects into frames and schedules frames to mitigate head-of-line (HOL) blocking

- Breaks objects into smaller chunks and interleaves these chunks for sending

- Can push unrequested objects to client
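
The effect of framing on HOL blocking can be sketched with a toy scheduler. This is an illustration only: it uses plain round-robin instead of client-specified priorities, and the object names, sizes and frame size are made up.

```python
from collections import deque

def split_into_frames(name, size, frame_size):
    """Split an object of `size` bytes into (name, nbytes) frames."""
    frames = []
    while size > 0:
        n = min(frame_size, size)
        frames.append((name, n))
        size -= n
    return frames

def fcfs(objects, frame_size):
    """Send each object completely before starting the next one."""
    order = []
    for name, size in objects:
        order += split_into_frames(name, size, frame_size)
    return order

def interleave(objects, frame_size):
    """Round-robin: send one frame from each unfinished object in turn."""
    queues = deque(
        deque(split_into_frames(name, size, frame_size)) for name, size in objects
    )
    order = []
    while queues:
        q = queues.popleft()
        order.append(q.popleft())
        if q:  # object still has frames left, so requeue it
            queues.append(q)
    return order

def finish_positions(order):
    """Map each object to the index of its last frame in the send order."""
    return {name: i for i, (name, _) in enumerate(order)}

objects = [("video", 4000), ("css", 1000), ("js", 1000)]
```

With FCFS the two small objects wait behind all four video frames; with interleaving they finish after the second and third frames sent, while the large object finishes last in both cases.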

HTTP/3

- runs over QUIC, which is built on top of UDP

HTTPS

- Connection encrypted by Transport Layer Security (TLS)

Cookies

- Maintain some state between transactions

- Four Components:

- Set-Cookie header line in the HTTP response message

- Cookie header line in next HTTP request message

- Cookie file kept on user's host, managed by user's browser

- Back-end database at website
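
The four components can be traced in a short sketch using Python's standard `http.cookies` module. The in-memory `backend_db`, the cookie name `id` and the value `1678` are assumptions for illustration; a real site would generate its own identifiers and use a persistent store.

```python
from http.cookies import SimpleCookie

# Hypothetical back-end database: cookie ID -> per-user state.
backend_db = {}

def server_first_response(user_id):
    """Server creates an entry and returns a Set-Cookie header line."""
    backend_db[user_id] = {"visits": 1}
    cookie = SimpleCookie()
    cookie["id"] = user_id
    return cookie.output()  # header line of the form "Set-Cookie: id=..."

def browser_store(set_cookie_line):
    """Browser parses the header value and keeps it in its cookie file (here: a jar)."""
    jar = SimpleCookie()
    jar.load(set_cookie_line.split(": ", 1)[1])
    return jar

def browser_next_request_header(jar):
    """Browser sends the stored cookie back in its next request."""
    return "Cookie: " + "; ".join(f"{k}={m.value}" for k, m in jar.items())

def server_handle(cookie_header):
    """Server looks the ID up in its database and updates the stored state."""
    sent = SimpleCookie()
    sent.load(cookie_header.split(": ", 1)[1])
    state = backend_db[sent["id"].value]
    state["visits"] += 1
    return state
```

HTTP itself stays stateless here: the state lives in the browser's cookie store and the server's database, and the header lines only carry the key that links them.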

Performance of HTTP

- Page Load Time (PLT) - time from the click until the page is displayed

- Can be improved by tuning HTTP itself, caching, and horizontally scaling the resources

- RTT - time for small packet to travel from client to server and back

- Non-persistent HTTP response time = 2 RTT + file transmission time
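
The response-time formula can be turned into a small calculator. This sketch assumes objects are fetched one after another (no parallel connections, no pipelining) and that every object has the same transmission time; the persistent-connection variant is included for comparison under those same assumptions, with one RTT spent once on connection setup. The numbers are made up.

```python
def non_persistent_time(rtt, transmit, objects=1):
    """Each object pays 1 RTT for TCP setup + 1 RTT for request/response + transmission."""
    return objects * (2 * rtt + transmit)

def persistent_time(rtt, transmit, objects=1):
    """TCP setup is paid once; each object then costs 1 RTT + transmission."""
    return rtt + objects * (rtt + transmit)

# Illustrative values in milliseconds: a base page plus 10 referenced objects.
RTT_MS = 100
TRANSMIT_MS = 20
N_OBJECTS = 11

np_total = non_persistent_time(RTT_MS, TRANSMIT_MS, N_OBJECTS)  # 11 * 220 = 2420 ms
p_total = persistent_time(RTT_MS, TRANSMIT_MS, N_OBJECTS)       # 100 + 11 * 120 = 1420 ms
```

For a single object the two functions agree (2 RTT + transmission time); the saving from a persistent connection grows with the number of objects on the page.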

How to improve performance

- Concurrent requests and responses

- Not necessarily in order

- Risk: overloading the server

- Reduce content size for transfer

- Persistent connection and pipelining

- Avoid repeat transfers of the same content

- Move content closer to the client

- Replication

- Replicate website across machines

- Spread the load across servers

- Expensive

- CDNs

- Caching and replication as a service

- Large scale distributed storage infrastructure

- Pull or push replications

- Dynamic content can also be handled

Persistent HTTP

- Leave TCP connection open after sending response

- Pipelining

- Client sends a request as soon as it encounters a referenced object

- Rather than sending a request only after receiving the previous response

Caching

- Less effective when many requests are unique (a growing share of content, such as video, is dynamic)

- Web caches/proxy servers

- User makes the initial request to the proxy server

- On a cache miss, the proxy requests the object from the origin server

- COMP3311 cache calculations

- Conditional GET

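- The server doesn't send the object if the cache has an up-to-date version (it replies 304 Not Modified instead)

- The request carries the date of the cached copy in an If-Modified-Since header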
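
A conditional GET can be sketched as follows. The `If-Modified-Since` header and the `304 Not Modified` status are standard HTTP; the helper functions and the way the origin server stores modification times are assumptions for illustration.

```python
from email.utils import formatdate, parsedate_to_datetime

def conditional_get_headers(cached_mtime):
    """Header the cache adds to its request: modification time of its stored copy."""
    return {"If-Modified-Since": formatdate(cached_mtime, usegmt=True)}

def origin_response(request_headers, object_mtime, body):
    """Origin server: reply 304 with an empty body if the cached copy is current."""
    since = request_headers.get("If-Modified-Since")
    if since is not None:
        since_ts = parsedate_to_datetime(since).timestamp()
        # HTTP dates have one-second resolution, so compare whole seconds.
        if int(object_mtime) <= since_ts:
            return 304, b""
    return 200, body

# Hypothetical modification time (seconds since the epoch) of the cached copy.
cached_mtime = 1_700_000_000
headers = conditional_get_headers(cached_mtime)
```

When the object is unchanged, the exchange still costs a round trip but no object bytes are transferred.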

Electronic Mail

- User agents (mail reader)

- Mail servers

- mailbox for all incoming messages

- message queue of outgoing mails

- SMTP

- between mail servers to send email messages

- Uses TCP under the hood

- Three way handshake

- Message must be ASCII

- Command/response

- command: ASCII text

- response: status code and phrase

- Push protocol, unlike HTTP, which is mainly a pull protocol

- Persistent connection

- Multiple objects are sent in one multipart message, whereas HTTP encapsulates each object in its own response message

DNS

- Distributed database implemented in hierarchy of many name servers

Functions:

- Map between IP address and host names

- Application layer protocol

- host aliasing

- Canonical name (CNAME), the true main host name

- Alias names, alternative names that map to the canonical name

- mail server aliasing

- route emails to correct mail server by Mail Exchange (MX) records

- load distribution

- load balancing to improve performance

- replicate web servers
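
These functions can be illustrated with a toy record table. Everything below is a sketch: the names and addresses are made up (documentation ranges), and rotating the address list on each query is one simple way to spread load across replicas, not a description of any particular DNS server.

```python
from collections import deque

# Toy zone data keyed by (name, record type); all values are made up.
RECORDS = {
    ("www.example.com", "CNAME"): deque(["web.example.com"]),
    ("web.example.com", "A"): deque(["192.0.2.10", "192.0.2.11", "192.0.2.12"]),
    ("example.com", "MX"): deque(["mail.example.com"]),
    ("mail.example.com", "A"): deque(["192.0.2.25"]),
}

def lookup(name, rtype):
    """Return the values for (name, rtype), rotating them after each query."""
    values = RECORDS.get((name, rtype))
    if not values:
        return []
    answer = list(values)
    values.rotate(-1)  # the next query sees a different first address
    return answer

def resolve_address(name, max_aliases=8):
    """Host aliasing: follow CNAME records to the canonical name, then fetch A records."""
    for _ in range(max_aliases):  # cap the chain length to avoid alias loops
        alias = lookup(name, "CNAME")
        if not alias:
            break
        name = alias[0]
    return name, lookup(name, "A")

def mail_server_address(domain):
    """Mail server aliasing: the MX record names the mail server for a domain."""
    mx = lookup(domain, "MX")
    if not mx:
        return None, []
    return mx[0], lookup(mx[0], "A")
```

Clients that take the first address in each answer end up on different replicas across successive queries.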

Characteristics:

- Uniqueness of names

- Scalable

- storage

- handle large scale requests

- Distributed, autonomous administration

- Ability to update my own domain names

- Highly available

- Fast lookup

DNS Hierarchy

Hierarchical namespace

- Names are written from the bottom of the hierarchy to the top (e.g. unsw.edu.au)

Hierarchically administered

- Root server -> Top-level domain (TLD) servers -> Authoritative DNS servers

- Each server stores a small subset of the total DNS database

- Every server knows the root servers

- Root servers know about all TLD servers

- Root server

- Contacted when a name server cannot resolve a name itself

- Managed by ICANN (Internet Corporation for Assigned Names and Numbers)

- Top-level domain (TLD)

- .com, .org, .au ...

- Authoritative DNS servers

- belongs to organisations

- Local DNS name servers

- Cache

- Entries time out once their TTL expires

- If a host changes IP address, the cached entry may be outdated until then

- Negative caching: failed lookups are remembered too, so the same failing request is not repeated

- Do not strictly belong to the hierarchy

- Each ISP (e.g. residential ISP, company) has one

- Types of query

- Iterative, where the local DNS server makes consecutive requests itself, starting from the root and following each referral to a child server, until it finds the result

- Recursive, where the local DNS server sends the request to the root, and the root sends a recursive request on to its child

- Heavy load at upper levels

- DNS records
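
Iterative resolution with a caching local name server can be sketched as a small simulation. The server names, zones and address below are made up, and the TTL is passed in as a parameter for simplicity (in real DNS each record carries its own TTL).

```python
import time

# Toy hierarchy: root -> TLD -> authoritative. All names and data are made up.
SERVERS = {
    "root": {"referrals": {"au": "tld-au", "com": "tld-com"}},
    "tld-au": {"referrals": {"unsw.edu.au": "auth-unsw"}},
    "tld-com": {"referrals": {}},
    "auth-unsw": {"answers": {"www.unsw.edu.au": "192.0.2.80"}},
}

def query(server, name):
    """One query to one server: an answer, a referral to a child, or a failure."""
    data = SERVERS[server]
    if name in data.get("answers", {}):
        return "answer", data["answers"][name]
    for suffix, child in data.get("referrals", {}).items():
        if name == suffix or name.endswith("." + suffix):
            return "referral", child
    return "nxdomain", None

class LocalResolver:
    """Local DNS server: iterates from the root and caches results until the TTL expires."""

    def __init__(self, clock=time.monotonic):
        self.cache = {}        # name -> (ip or None, expiry time)
        self.clock = clock
        self.queries_sent = 0

    def resolve(self, name, ttl=60):
        hit = self.cache.get(name)
        if hit and hit[1] > self.clock():
            return hit[0]      # positive or negative cache hit
        server = "root"
        while True:
            self.queries_sent += 1
            kind, value = query(server, name)
            if kind == "referral":
                server = value  # the local server itself contacts the next server
                continue
            ip = value if kind == "answer" else None  # None is a negative cache entry
            self.cache[name] = (ip, self.clock() + ttl)
            return ip
```

The first lookup of `www.unsw.edu.au` costs three queries (root, TLD, authoritative); repeats within the TTL cost none, which is what keeps load off the upper levels of the hierarchy.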

Hierarchy of servers (distributed)

Reliability

- DNS servers are replicated, and load-balanced between replicas

- UDP is usually used

DNS security

- DDoS attacks

- man-in-the-middle

- intercept DNS queries

- DNS poisoning

P2P Applications

- Self scalability - new peers bring new service capacity

- Examples: BitTorrent, cryptocurrencies

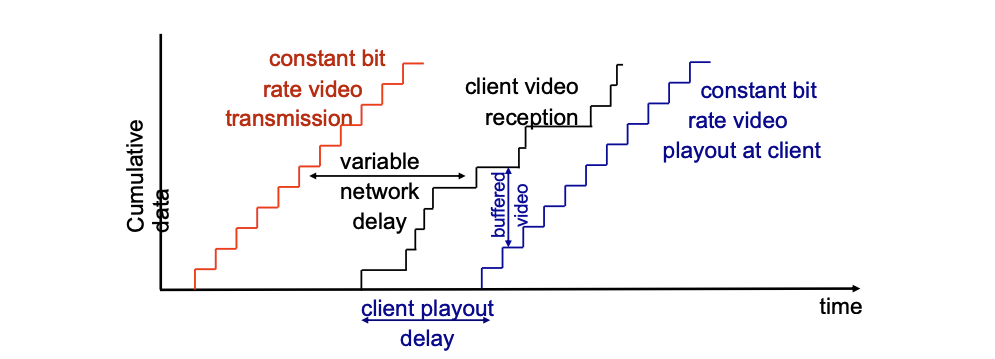

Video Streaming and Content Distribution Networks (CDNs)

- A video is a sequence of images, each essentially an array of pixels

Challenges

- Network delay is variable (needs a client-side buffer)

- Packets might be lost

DASH

- Dynamic, Adaptive Streaming over HTTP

- Streaming video = encoding + DASH + playout buffering

Server

- Divide video file into multiple chunks

- Each chunk stored, and encoded at different rates

- Provide URL for different chunks

Client

- Periodically measure server-client bandwidth

- Request one chunk at a time

- Choose max coding rate sustainable given the current bandwidth

- can choose different coding rate at different points in time

- client determines:

- When to request chunk - avoid starvation

- What encoding - higher quality if bandwidth is sufficient

- Where to request - from URL server that is closer or has high available bandwidth
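
The client's rate choice can be sketched as a function of the measured bandwidth. The encoding rates, the bandwidth samples and the `safety` margin are assumptions for illustration; real players use more elaborate logic that also looks at buffer occupancy.

```python
def choose_encoding(available_kbps, measured_kbps, safety=0.8):
    """Pick the highest encoding rate that fits within safety * measured bandwidth.

    Falls back to the lowest rate when even that one does not fit,
    so that playback can continue at reduced quality.
    """
    budget = measured_kbps * safety
    sustainable = [rate for rate in available_kbps if rate <= budget]
    return max(sustainable) if sustainable else min(available_kbps)

def plan_session(available_kbps, bandwidth_samples):
    """Choose a rate per chunk, one bandwidth measurement before each request."""
    return [choose_encoding(available_kbps, bw) for bw in bandwidth_samples]

# Hypothetical encoding rates offered by the server (kbps).
RATES = [300, 750, 1500, 3000]
```

For example, `plan_session(RATES, [5000, 1000, 400])` steps down from the 3000 kbps encoding to 750 and then 300 as the measured bandwidth drops, since each chunk is requested and chosen independently.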

CDNs

- Stores copies of content at CDN nodes

- Subscribers request content from the CDN

- Usually combination of push and pull CDN

Over-the-top (OTT) - delivery of content over the internet without requiring users to subscribe to traditional cable, satellite...

The role of the CDN provider’s authoritative DNS name server in a content distribution network, simply described, is:

- to map the query for each CDN object to the CDN server closest to the requestor (browser)

Socket Programming with UDP and TCP

UDP

- No connection or handshake

- Sender attaches the destination IP address and port # to each packet

- transmitted data may be lost or received out-of-order (unreliable transfer)
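
A minimal UDP exchange with Python's `socket` module might look like the sketch below. Client and server run in one process on the loopback interface purely for illustration, and the uppercase "service" is made up.

```python
import socket

def udp_round_trip(message: bytes) -> bytes:
    """Send one datagram to a local UDP server and return its uppercased reply."""
    server = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    server.settimeout(2.0)
    server.bind(("127.0.0.1", 0))   # port 0: let the OS pick a free port
    server_addr = server.getsockname()

    client = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    client.settimeout(2.0)          # UDP gives no delivery guarantee, so never wait forever
    try:
        # No connection or handshake: the destination is attached to each datagram.
        client.sendto(message, server_addr)

        # The server learns the sender's address from the datagram itself.
        data, client_addr = server.recvfrom(2048)
        server.sendto(data.upper(), client_addr)

        reply, _ = client.recvfrom(2048)
        return reply
    finally:
        client.close()
        server.close()
```

On a real network either datagram could be lost, in which case `recvfrom` would raise a timeout and the application would have to decide whether to retry.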

TCP

- connection must be established

- provides reliable, in-order byte-stream transfer between client and server

- 1 to 1 mapping between client and server side sockets and ports

- When the server socket is busy, incoming connection requests are stored in a queue (if the queue is full, new requests are dropped)
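
The welcome socket, the connection socket and the pending-connection queue can all be seen in a short sketch. Client and server share one process on the loopback interface for illustration; the backlog of 5 and the uppercase reply are arbitrary choices.

```python
import socket

def tcp_round_trip(message: bytes) -> bytes:
    """Connect to a local TCP server, send a message, return the uppercased reply."""
    welcome = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    welcome.settimeout(2.0)
    welcome.bind(("127.0.0.1", 0))   # port 0: let the OS pick a free port
    welcome.listen(5)                # backlog: how many pending connections may queue

    client = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    client.settimeout(2.0)
    try:
        # The OS completes the handshake; the connection then waits in the
        # listen queue because the server has not called accept() yet.
        client.connect(welcome.getsockname())
        client.sendall(message)

        # accept() takes the queued connection and returns a new socket
        # dedicated to this client; the welcome socket keeps listening.
        conn, _peer = welcome.accept()
        with conn:
            data = conn.recv(2048)   # a byte stream: short messages arrive whole in practice
            conn.sendall(data.upper())

        return client.recv(2048)
    finally:
        client.close()
        welcome.close()
```

Unlike UDP, no address is attached to individual sends: once the connection exists, both ends simply read from and write to their socket.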

Concurrency

- Parent process creates a welcome socket

- Once a connection request is received, a new process is assigned to service the connection socket, and the parent process is freed up to keep serving the welcome socket.
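
The same pattern can be sketched with one worker per connection. Note the substitution: this example uses threads rather than separate processes to keep it short and portable, and the `demo` function with its local clients exists only to exercise the server.

```python
import socket
import threading

def handle(conn):
    """Service one connection socket: echo data in upper case until the client closes."""
    with conn:
        while (data := conn.recv(2048)):
            conn.sendall(data.upper())

def serve(welcome, max_clients):
    """Accept loop on the welcome socket; each connection gets its own worker."""
    workers = []
    for _ in range(max_clients):
        conn, _addr = welcome.accept()
        worker = threading.Thread(target=handle, args=(conn,))
        worker.start()               # the accept loop is immediately free again
        workers.append(worker)
    for worker in workers:
        worker.join()

def demo(n_clients=3):
    """Open several connections at once and talk to each while the others stay open."""
    welcome = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    welcome.settimeout(5.0)
    welcome.bind(("127.0.0.1", 0))
    welcome.listen(n_clients)
    addr = welcome.getsockname()
    server = threading.Thread(target=serve, args=(welcome, n_clients))
    server.start()

    clients = [socket.create_connection(addr, timeout=2.0) for _ in range(n_clients)]
    replies = []
    for i, client in enumerate(clients):
        client.sendall(f"client {i}".encode())
        replies.append(client.recv(2048))
    for client in clients:
        client.close()
    server.join()
    welcome.close()
    return replies
```

A server that handled each connection inline would stall here: the first client stays connected while the others wait for replies, so only a design that hands connections off can answer all of them.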